TLDR

Large language models (LLMs) are becoming increasingly common in the field of text processing AI. ChatGPT, powered by OpenAI’s GPT models, is perhaps the most well-known example of an LLM-based tool.

However, there are many other chatbots and text generators, such as Google Bard, Anthropic’s Claude, Writesonic, and Jasper, that also rely on LLMs.

LLMs have been under development in research labs since the late 2010s. However, their adoption has accelerated significantly since the release of ChatGPT, which demonstrated the capabilities of GPT models and brought LLMs into practical use.

While some LLMs have been in development for years, others have been quickly developed to capitalise on the latest trends. Additionally, there are many open-source LLMs used for research purposes. In the following sections, we’ll explore some of the most significant LLMs currently in use.

What is an LLM?

An LLM, or large language model, is a versatile AI text generator. It powers AI chatbots and AI writing generators.

LLMs work like advanced auto-complete tools. They take a prompt and generate an answer by repeatedly predicting plausible follow-on text. Unlike keyword-based systems that provide pre-programmed responses, LLM-based chatbots strive to understand queries and respond appropriately.
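To make the auto-complete idea concrete, here is a toy sketch. It is not how a real LLM is built: it only counts which word follows which in a tiny made-up corpus, then extends a prompt with the most frequent follower. The function names and the corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follow = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follow[current][nxt] += 1
    return follow

def complete(follow, prompt, max_words=5):
    """Greedily extend the prompt with the most frequent follower at each step."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = follow.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Made-up training text, far smaller than anything a real model sees.
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigrams(corpus)
print(complete(model, "the cat", max_words=3))
```

A real LLM replaces the word counts with a neural network and looks at the whole prompt rather than only the last word, but the loop of "predict the next piece, append it, repeat" is the same basic shape.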

This flexibility has led to the widespread adoption of LLMs. The same models, with or without additional training, can handle customer inquiries, create marketing content, summarise meeting minutes, and perform various other tasks.

How do LLMs work?

Early LLMs, such as GPT-1, would start generating nonsensical text after a few sentences. However, today’s LLMs, like GPT-4, can produce thousands of coherent words.

To reach this level, LLMs were trained on massive datasets. The specifics vary slightly among different LLMs, depending on how carefully developers acquired rights to the data.

As a general rule, though, they were trained on something akin to the entire public internet and a vast array of published books. This is why LLMs can produce authoritative-sounding text on such a wide range of topics.

Using this training data, LLMs model the relationships between different words (or tokens) using high-dimensional vectors. This process is complex and mathematical, but essentially, each token is assigned a unique ID, and similar concepts are grouped together.
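As a rough illustration of token IDs and vectors, the sketch below uses a hypothetical three-word vocabulary and hand-written three-dimensional vectors. Real models learn these values during training and use far more dimensions; the numbers here are invented purely to show that related words end up pointing in similar directions.

```python
import math

# Hypothetical vocabulary: each token gets a unique integer ID.
vocab = {"apple": 0, "pear": 1, "laptop": 2}

# Hypothetical embeddings, indexed by token ID (values invented for illustration).
embeddings = [
    [0.9, 0.8, 0.1],  # apple
    [0.8, 0.9, 0.0],  # pear
    [0.1, 0.0, 0.9],  # laptop
]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def similarity(word_a, word_b):
    """Look up both tokens by ID and compare their vectors."""
    return cosine_similarity(embeddings[vocab[word_a]], embeddings[vocab[word_b]])

print(similarity("apple", "pear") > similarity("apple", "laptop"))
```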

This information is used to create a neural network, which is loosely modelled on the way neurons connect in the human brain and sits at the core of every LLM.

The neural network consists of an input layer, an output layer, and multiple hidden layers, each with numerous nodes. These nodes determine what words should follow in the generated text, with different nodes having different weights.
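To see how layers and nodes translate into a parameter count, here is a minimal sketch. It assumes plain fully connected layers, where every node in one layer connects to every node in the next and each node has one bias. Real LLMs use transformer layers with a more involved structure, so treat this as an illustration of the idea rather than a formula for any particular model.

```python
def count_parameters(layer_sizes):
    """Count weights plus biases for a stack of fully connected layers.

    layer_sizes lists the number of nodes in each layer, from input to output.
    """
    total = 0
    for inputs, outputs in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * outputs  # one weight per connection
        total += outputs           # one bias per node in the next layer
    return total

# Hypothetical tiny networks: 4 inputs, two hidden layers, 3 outputs.
small = count_parameters([4, 8, 8, 3])
wider = count_parameters([4, 16, 16, 3])
print(small, wider)
```

Doubling the width of the hidden layers nearly triples the parameter count here, which hints at why model sizes climb into the billions so quickly.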

Example:

If the input contains the word “Apple,” the network might predict “Mac” or “iPad,” “pie” or “crumble,” or something else entirely. The complexity of the text a model can understand and generate depends on the number of layers and nodes in the neural network, which corresponds to the number of parameters in the LLM.
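The "Apple" prediction can be sketched as a scoring step followed by a softmax, a standard way to turn raw scores into probabilities. The scores below are made up for illustration; in a real model they come out of the network and cover the whole vocabulary.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {token: math.exp(s - highest) for token, s in scores.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}

# Hypothetical raw scores for the word after "Apple" (invented numbers).
after_apple = {"Mac": 2.0, "iPad": 1.5, "pie": 1.0, "crumble": 0.5}

probabilities = softmax(after_apple)
most_likely = max(probabilities, key=probabilities.get)

for token, p in sorted(probabilities.items(), key=lambda kv: -kv[1]):
    print(f"{token}: {p:.2f}")
```

The model does not have to pick the top candidate every time. Sampling from these probabilities is one reason the same prompt can produce different answers.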

Training an AI model solely on the open internet without guidance would be both nightmarish and impractical. Therefore, LLMs undergo further training and fine-tuning to ensure they generate safe and useful responses. This process includes adjusting the weights of nodes in the neural network, among other techniques.

All this is to say that while LLMs are black boxes, what’s going on inside them isn’t magic. Once you understand a little about how they work, it’s easy to see why they’re so good at answering certain kinds of questions. It’s also easy to understand why they tend to make up (or hallucinate) random things.

For example, take questions like these:

- What bones does the femur connect to?

- What currency does the USA use?

- What is the tallest mountain in the world?

These are easy for LLMs, because the text they were trained on is highly likely to have generated a neural network that’s predisposed to respond correctly.

Then look at questions like these:

- What year did Margot Robbie win an Oscar for Barbie?

- What weighs more, a ton of feathers or a ton of feathers?

- Why did China join the European Union?

You’re far more likely to get something weird with these. The neural network will still generate follow-on text, but because the questions are tricky or built on a false premise, the answer is less likely to be correct.

How can LLMs be used?

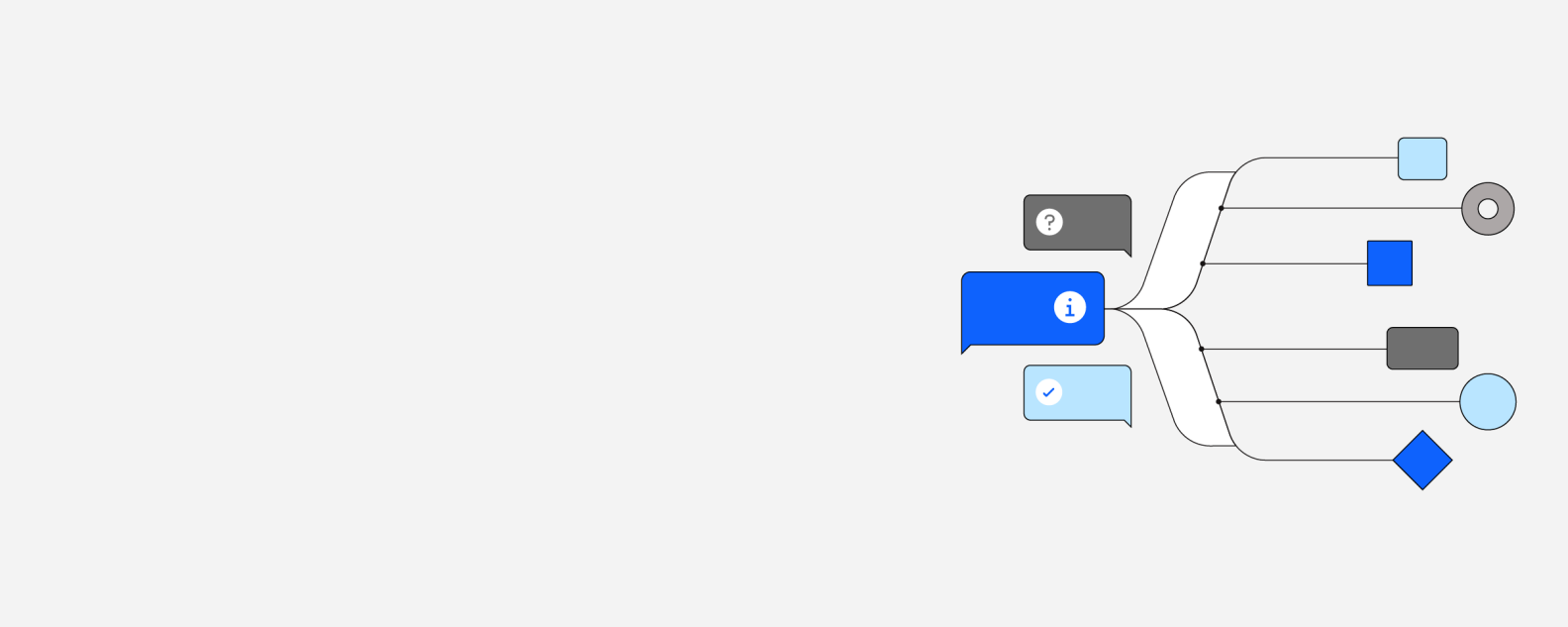

LLMs are powerful mostly because they generalize to so many different situations and uses. The same core LLM (sometimes with a bit of fine-tuning) can be used to do dozens of different tasks.

While everything they do is based around generating text, the specific way they’re prompted changes what features they appear to have.

Here are some of the tasks LLMs are commonly used for:

- General-purpose chatbots (like ChatGPT and Google Bard)

- Customer service chatbots that are trained on your business’s docs and data

- Translating text from one language to another

- Converting text into computer code, or one language into another

- Generating social media posts, blog posts, and other marketing copy

- Sentiment analysis

- Moderating content

- Correcting and editing writing

- Data analysis

And hundreds of other things. We’re only in the early days of the current AI revolution.

But there are also plenty of things that LLMs can’t do on their own and that other kinds of AI models can. A few examples:

- Interpret images

- Generate images

- Convert files between different formats

- Search the web

- Perform maths and other logical operations

Of course, some LLMs and chatbots appear to do some of these things. But in most cases, there’s another AI service stepping in to assist.
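Here is a hedged sketch of what "another service stepping in" might look like for maths. Everything in it is hypothetical: `calculate` is a small stand-in for a calculator tool, and `llm` is whatever function you would call to get a model's reply. Real products route requests in more sophisticated ways.

```python
import ast
import operator

# Arithmetic operators the calculator tool is allowed to apply.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculate(expression):
    """Evaluate +, -, *, / on plain numbers without using eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)

def answer(prompt, llm):
    """Send arithmetic to the calculator and everything else to the model."""
    try:
        return str(calculate(prompt))
    except (ValueError, SyntaxError):
        return llm(prompt)

# Stand-in for a real model call, used only to show the routing.
def fake_llm(prompt):
    return "(model-generated text)"

print(answer("12 * (3 + 4)", fake_llm))
print(answer("Why is the sky blue?", fake_llm))
```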

When one model is handling a few different kinds of inputs, it actually stops being considered a large language model and becomes something called a large multimodal model (though, to a certain degree, it’s just semantics).

What are the benefits of using open-source LLMs?

There are several short-term and long-term benefits to choosing open-source LLMs over proprietary LLMs. Here are some compelling reasons:

Enhanced data security

Using proprietary LLMs can pose risks such as data leaks or unauthorised access to sensitive information by the LLM provider. Open-source LLMs give companies full control over their data, enhancing security and privacy.

Cost savings and reduced vendor dependency

Proprietary LLMs often require costly licences. In contrast, open-source LLMs are typically free to use, saving companies money in the long term. However, running LLMs still requires resources, such as cloud services or infrastructure.

Code transparency and model customization

Open-source LLMs typically provide access to their source code and architecture, and in some cases to their training data. This transparency allows for scrutiny and customization, enabling companies to tailor the models to their needs.

Active community support

The open-source community fosters innovation by democratising access to LLM and generative AI technologies. Developers can contribute to improving models, reducing biases, and enhancing accuracy and performance.

Addressing the environmental impact of AI

The environmental footprint of LLMs, including carbon emissions and water consumption, is a growing concern. Open-source LLMs provide researchers with data on resource requirements, enabling them to develop strategies to reduce AI’s environmental impact.

Top 7 LLMs To Use: Our Picks

Here are our top picks:

LLaMA 2

Most top players in the LLM (Large Language Model) space have chosen to develop their models behind closed doors. However, Meta is taking a different approach.

With the release of its powerful, open-source Large Language Model Meta AI (LLaMA) and its upgraded version (LLaMA 2), Meta is making a significant impact in the market.

Launched in July 2023 for research and commercial use, LLaMA 2 is a pre-trained generative text model with 7 to 70 billion parameters. It has been fine-tuned using Reinforcement Learning from Human Feedback (RLHF).

This model can be used as a chatbot and can be adapted for various natural language generation tasks, including programming. Meta has already introduced two open, customised versions of LLaMA 2, called Llama Chat and Code Llama.

BLOOM

Launched in 2022 after a year-long collaborative project involving volunteers from 70+ countries and researchers from Hugging Face, BLOOM is an autoregressive LLM trained to continue text from a prompt using vast amounts of text data and industrial-scale computational resources.

BLOOM’s release marked an important step in democratising generative AI. With 176 billion parameters, BLOOM is one of the most powerful open-source LLMs, capable of providing coherent and accurate text in 46 languages and 13 programming languages.

Transparency is a key feature of BLOOM. The project allows anyone to access the source code and training data to run, study, and improve the model. BLOOM can be used for free through the Hugging Face ecosystem.

BERT

The foundation of LLMs is a neural architecture called a transformer, developed in 2017 by Google researchers in the paper “Attention is All You Need.” One of the first models to demonstrate the potential of transformers was BERT (Bidirectional Encoder Representations from Transformers).

Launched in 2018 as an open-source LLM, BERT quickly achieved state-of-the-art performance in many natural language processing tasks.

Due to its innovative features and open-source nature, BERT has become one of the most popular and widely used LLMs. For example, in 2020, Google announced that it had integrated BERT into Google Search in over 70 languages.

There are currently thousands of open-source, free, and pre-trained BERT models available for specific use cases such as sentiment analysis, clinical note analysis, and toxic comment detection.

Falcon 180B

If the Falcon 40B impressed the open-source LLM community (ranking #1 on Hugging Face’s leaderboard for open-source large language models), the new Falcon 180B indicates that the gap between proprietary and open-source LLMs is quickly narrowing.

Released by the Technology Innovation Institute of the United Arab Emirates in September 2023, Falcon 180B has 180 billion parameters and was trained on 3.5 trillion tokens.

At this scale, Falcon 180B has already outperformed LLaMA 2 and GPT-3.5 in various NLP tasks, and Hugging Face suggests it can rival Google’s PaLM 2, the LLM that powers Google Bard.

Although free for commercial and research use, Falcon 180B requires significant computing resources to function.

OPT-175B

The release of the Open Pre-trained Transformers Language Models (OPT) in 2022 marked another significant milestone in Meta’s strategy to advance the LLM field through open source.

OPT includes a suite of decoder-only pre-trained transformers ranging from 125M to 175B parameters. OPT-175B, one of the most advanced open-source LLMs on the market, is the most powerful model in the suite, with performance similar to GPT-3. Both pre-trained models and source code are available to the public.

However, if you plan to develop an AI-driven company with LLMs, you should consider another model, as OPT-175B is released under a non-commercial license, allowing only research use cases.

XGen-7B

Salesforce recently joined the LLM race by launching its XGen-7B LLM in July 2023.

Most open-source LLMs can only take a limited amount of text as context when generating an answer. XGen-7B, by contrast, aims to support longer context windows.

The most advanced version of XGen (XGen-7B-8K-base) allows for an 8K context window, meaning it can process larger amounts of input and output text.
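To show what a context window means in practice, here is a small sketch. It assumes that "8K" means 8,192 tokens and that the prompt and the generated reply share the same window. Both are common conventions, but check the model's documentation. The function names are illustrative, and the token list stands in for the output of a real tokenizer.

```python
def fits_context(prompt_tokens, max_new_tokens, context_window=8192):
    """Return True if the prompt plus the planned reply fit in the window."""
    return prompt_tokens + max_new_tokens <= context_window

def truncate_to_budget(tokens, max_new_tokens, context_window=8192):
    """Keep only the most recent tokens that still leave room for the reply."""
    budget = context_window - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    return tokens[-budget:]

# Hypothetical prompt of 10,000 token IDs and a 192-token reply.
prompt = list(range(10_000))
trimmed = truncate_to_budget(prompt, max_new_tokens=192)
print(len(trimmed), fits_context(len(trimmed), 192))
```

Dropping the oldest tokens is only one possible strategy. Summarising earlier text or retrieving just the relevant passages are common alternatives.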

Efficiency is another priority in XGen, which has only 7B parameters, significantly fewer than other powerful open-source LLMs like LLaMA 2 or Falcon.

Despite its relatively small size, XGen can still deliver excellent results. The model is available for commercial and research purposes, except for the XGen-7B-{4K,8K}-inst variant, which is trained on instructional data and RLHF and is released under a noncommercial licence.

GPT-NeoX and GPT-J

Developed by researchers from EleutherAI, a non-profit AI research lab, GPT-NeoX and GPT-J are two great open-source alternatives to GPT.

GPT-NeoX has 20 billion parameters, while GPT-J has 6 billion parameters. Although the most advanced LLMs have over 100 billion parameters, these two LLMs can still deliver results with high accuracy.

They have been trained with 22 high-quality datasets from a diverse set of sources that enable their use in multiple domains and many use cases. Unlike the instruction-tuned GPT models behind ChatGPT, GPT-NeoX and GPT-J have not been trained with RLHF.

A wide range of natural language processing tasks can be performed with GPT-NeoX and GPT-J, from text generation and sentiment analysis to research and marketing campaign development.

Both LLMs are available for free through the NLP Cloud API.

Why are there so many LLMs?

Until a year or two back, LLMs were limited to research labs and tech demos at AI conferences. Now, they’re powering countless apps and chatbots, and there are hundreds of different models available that you can run yourself (if you have the computer skills). How did we get here?

Well, there are a few factors in play. Some of the big ones are:

- With GPT-3 and ChatGPT, OpenAI demonstrated that AI research had reached the point where it could be used to build practical tools—so lots of other companies started doing the same.

- LLMs take a lot of computing power to train, but it can be done in a matter of weeks or months.

- There are lots of open source models that can be retrained or adapted into new models without the need to develop a whole new model.

- There’s a lot of money being thrown at AI companies, so there are big incentives for anyone with the skills and knowledge to develop any kind of LLM to do so.

Factors To Consider When Choosing The Right LLM

The open-source LLM space is expanding rapidly, with more options available than proprietary models. To select the right open-source LLM for your needs, consider the following factors:

- Purpose: Determine what you want to achieve with the LLM. Some open-source LLMs are only for research, so if you plan to use it for commercial purposes, check the licensing restrictions.

- Necessity: Consider if you really need an LLM for your project. While LLMs offer many possibilities, they are not always essential. If you can achieve your goals without an LLM, you might save money and resources.

- Accuracy: The size of an LLM affects its accuracy. Larger models like LLaMA or Falcon tend to be more accurate but require more resources to train and operate.

- Cost: Operating larger LLMs can be expensive due to the resources needed for training and operation. Consider your budget and the resources available before choosing an LLM.

- Pre-trained Models: Check if a pre-trained model fits your needs before considering training your own LLM. There are many open-source pre-trained LLMs available for specific use cases.
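The purpose and cost factors above can be sketched as a quick shortlist. This is back-of-the-envelope only: it estimates the memory needed just to hold a model's weights (parameters times bytes per parameter) and ignores everything else a running model needs. The parameter counts and commercial-use flags are the ones described in this article; always check the actual licence before relying on them. All names in the code are illustrative.

```python
from dataclasses import dataclass

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params, precision="fp16"):
    """Rough memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

@dataclass
class Model:
    name: str
    params: float
    commercial_use: bool  # as described in this article, not legal advice

CANDIDATES = [
    Model("LLaMA 2 70B", 70e9, True),
    Model("Falcon 180B", 180e9, True),
    Model("OPT-175B", 175e9, False),
    Model("XGen-7B (base)", 7e9, True),
]

def shortlist(models, memory_budget_gb, need_commercial, precision="fp16"):
    """Keep models whose licence fits the purpose and whose weights fit the budget."""
    return [
        m.name
        for m in models
        if (m.commercial_use or not need_commercial)
        and weight_memory_gb(m.params, precision) <= memory_budget_gb
    ]

print(shortlist(CANDIDATES, memory_budget_gb=160, need_commercial=True))
```

Under these assumptions, a 160 GB budget at 16-bit precision rules out the two largest models on size alone, which is the kind of trade-off the accuracy and cost factors are pointing at.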

7 Best LLMs To Use in 2024: The Future

I think we’re going to see a lot more LLMs in the near future, especially from major tech companies. Amazon, IBM, Intel, and NVIDIA all have LLMs under development, in testing, or available for customers to use.

They’re not as buzzy as the models I listed above, nor are regular people ever likely to use them directly, but I think it’s reasonable to expect large enterprises to start deploying them widely.

I also think we’re going to see a lot more efficient LLMs tailored to run on smartphones and other lightweight devices. Google has already hinted at this with Gemini Nano, which runs some features on the Google Pixel 8 Pro. Developments like Mistral’s Mixtral 8x7B demonstrate techniques that enable smaller LLMs to compete efficiently with larger ones.

The other big thing that’s coming is large multimodal models or LMMs. These combine text generation with other modalities, like images and audio, so you can ask a chatbot what’s going on in an image or have it respond with audio. GPT-4 Vision (GPT-4V) and Google’s Gemini models are two of the first LMMs that are likely to be widely deployed, but we’re definitely going to see more.

Other than that, who can tell? Three years ago, I definitely didn’t think we’d have powerful AIs like ChatGPT available for free. Maybe in a few years, we’ll have artificial general intelligence (AGI). If you want to learn more about LLMs, join atomcamp’s Data Science and AI bootcamp to build the AI skills this job market demands.