Data Engineering Bootcamp

- Online

- 4th January

- 4 Months

- Friday, 7pm - 9pm

- Saturday & Sunday, 12pm - 2pm

Course Description

The high demand for data engineering, both locally and internationally, has prompted atomcamp x data pilot to launch its data engineering bootcamp. This bootcamp is project-based and offers hands-on exercises in tools like Hadoop, Apache Kafka, and AWS.

In our 4-month Data Engineering bootcamp, you’ll progress from fundamentals such as data pipelines and ETL to specialized skills in big data and cloud computing. Each week tackles a critical aspect of data engineering. The course culminates in a capstone project, ensuring you’re fully equipped to launch a global career in creating and managing sophisticated data architectures.

Course Curriculum

- Overview of Data Engineering and its Role in the Data Lifecycle

- Understanding Data Pipelines and ETL (Extract, Transform, Load) Processes

- Introduction to Data Modeling and Data Integration Tools

- Setting Up the Development Environment and Tools for Data Engineering

- Understanding CDC (Change Data Capture) and SCD (Slowly Changing Dimensions)

- Hands-On Exercises on Implementing CDC and SCD

- Concepts of Data Modeling and Schema Design

- Relational Database Management Systems (RDBMS), SQL, and ACID Properties

- Introduction to Scoped Source Systems and Identifying Key Business Functions

- Understanding Data Warehousing Concepts and Architecture

- Introduction to Dimensional Modeling, Star and Snowflake Schemas, Data Vault Design, and Data Marts

- Overview of Data Warehouse Technologies (e.g., Snowflake, Redshift, BigQuery)

- Data Modeling for Various Data Architectures

- Hands-On Exercises on Building Data Warehouses and Data Marts

- Techniques for Data Integration and Data Quality Assurance

- Data Extraction from Various Sources (Databases, APIs, Web Scraping)

- Data Transformation and Cleansing Using ETL Tools (Python, Fivetran, Dataddo, Talend, Informatica)

- Hands-On Exercises on Data Integration and Transformation

- Process of Cleaning, Restructuring, and Enriching Raw Data

- Handling Missing Values, Duplicates, Correcting Data Types, and Standardizing Data

- Reshaping, Pivoting, and Aggregating Data

- Combining Multiple Datasets Efficiently

- Parsing JSON, XML, and Other Semi-Structured and Unstructured Data Formats

- Hands-On Exercises on Data Wrangling Using SQL and Python

- Overview of Cloud Computing Platforms (e.g., AWS, Azure, GCP)

- Cloud-Based Data Storage and Processing Services (e.g., S3, Redshift, BigQuery)

- Leveraging Cloud Platforms for Scalable and Efficient Data Engineering

- Hands-On Exercises on Deploying Data Engineering Solutions on the Cloud Using AWS Lambda, GCP Cloud Functions, Pub/Sub, Azure Data Factory, and Databricks

- Introduction to Real-Time Data Streaming and Event-Driven Architectures

- Processing Streaming Data Using Tools Like Apache Kafka

- Real-Time Analytics Using Technologies Like Apache Spark Streaming or Apache Flink

- Hands-On Exercises on Working with Streaming Data and Real-Time Analytics

- Introduction to Data Governance and Its Importance in Data Engineering

- Implementing Data Access Controls and Encryption Techniques

- Hands-On Exercises on Data Governance and Security Practices Using OpenMetadata

- Workflow Orchestration Tools (e.g., Apache Airflow, Mage AI)

- Building and Managing Data Pipelines Using Workflow Automation

- Comparison of Scheduling Techniques Across AWS, GCP, Azure, and Databricks

- Monitoring and Error Handling in Data Engineering Workflows

- Hands-On Exercises on Workflow Orchestration and Automation

- Techniques for Optimizing Data Processing Performance

- Indexing Strategies for Relational and NoSQL Databases

- Scaling Data Pipelines and Distributed Computing for Efficient Processing

- Hands-On Exercises on Performance Optimization and Scalability

- Data Engineering Project Incorporating All Concepts Learned

- Guidance and Mentorship Provided by the Instructor

- Project Presentation and Evaluation

Prerequisites for the Bootcamp

- Computer Science background

- Database and data warehousing background is a plus

- Basic programming skills in Python

Complete our Bootcamp and Become Job Ready

We help our successful graduates find jobs and internships, and we also provide guidance in discovering freelance opportunities.

Learning Objectives

- Foundations of Data Engineering: Participants will understand the role of data engineering, be able to distinguish data pipelines from ETL processes, and explain the significance of each.

- Data Technologies and Tools: Participants will be able to navigate big data technologies, including Hadoop ecosystem components, and implement data integration, transformation, and extraction techniques.

- Data Warehousing and Architecture: Participants will be able to comprehend data warehousing architecture and develop data models. They will gain practical experience with data warehouse technologies and dimensional modeling.

- Real-Time Data Processing and Cloud Computing: Participants will explore real-time data streaming and event-driven architectures and use cloud computing platforms for storage, processing, and analytics. They will implement data access controls and security measures.

- Workflow Automation and Impactful Insights: Participants will employ workflow orchestration tools for data pipeline automation, create impactful data visualizations using industry-standard tools, and optimize the performance and scalability of data processing pipelines.

Key Features

Industry Ready Portfolio

Craft a portfolio that showcases your industry-ready data engineering projects.

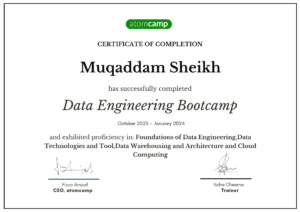

Earn a Verified Certificate of Completion

Earn a data engineering certificate, verifying your skills. Step into the market with a proven and trusted skillset.

Pricing Plans

Standard Monthly

- For 4 Months

Lump Sum (Advance Payment)

- For 4 Months